About

I have a soft spot for fictional computers, having implemented compilers, bytecode interpreters, and even program synthesis for various made-up computer architectures.

This post is yet another dive into VM implementation, looking at a particularly fascinating ecosystem from Hundred Rabbits:

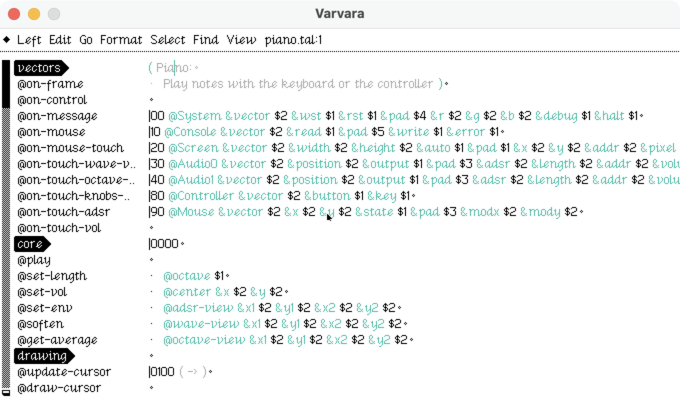

- The Uxn CPU is a simple, 256-instruction stack machine

- Varavara defines a set of peripherals that turn that CPU into an actual computer (display, keyboard, mouse, etc)

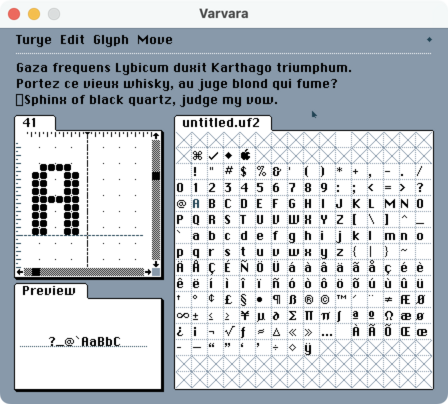

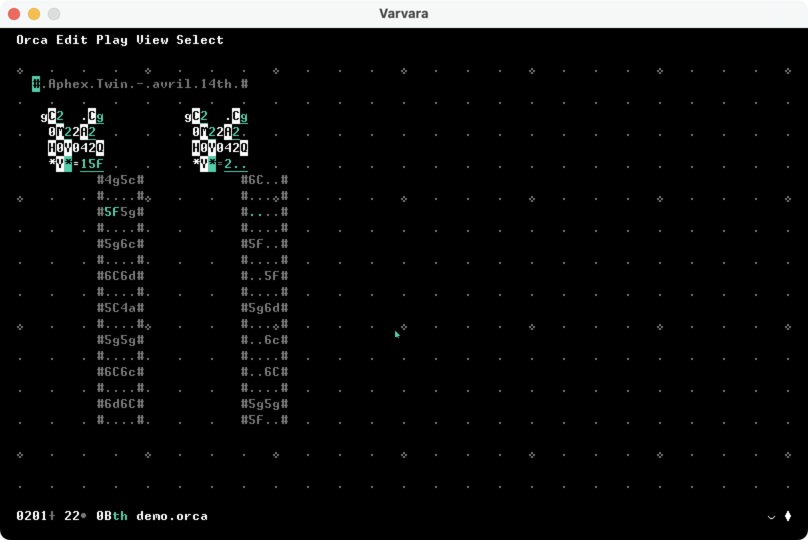

Unlike many fictional computers, the Uxn + Varvara ecosystem is sophisticated enough for actual use, and there are dozens of different ROMs – everything from text editors to drawing programs to synthesizers.

The Hundred Rabbits devlog motivates this design with far more eloquence than I could offer; I'd encourage you to browse their sprawling wiki of documentation, blog posts, and development notes.

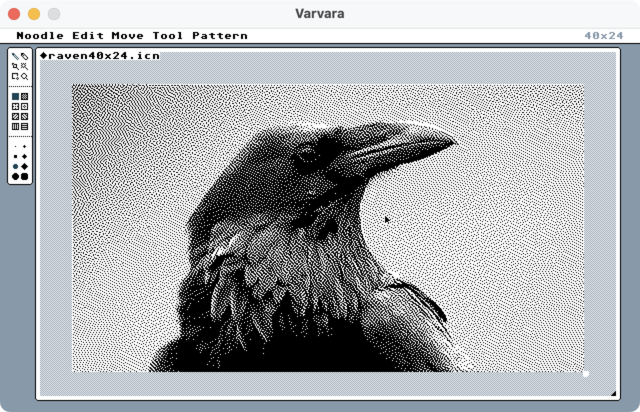

My implementation is called Raven.

It includes two library crates, and two applications:

raven-uxnimplements the CPU in safe,#[no_std]Rustraven-varvaraimplements the Varvara systemraven-cliis a CLI interface to the systemraven-guiis a full-fledged GUI, running both natively and using WebAssembly

You can try out the GUI online! It includes several example ROMs, or you can bring your own; load them by dragging-and-dropping them into the window.

The Uxn CPU

My implementation of the Uxn CPU is in the

raven-uxn crate.

It's 10-20% faster than the

reference implementation

on compute-heavy workloads, and is written in 100% safe Rust.

High performance was an explicit goal of this project, so I'm pleased with how well it turned out. I'm also pleased by the quality of the code: I didn't have to make many sacrifices to reach these speeds.

Writing a fast interpreter

The Uxn CPU has 256 different opcodes. Unlike register-based machines, the opcode fully defines behavior; there are no additional arguments in the form of which registers to modify.

This means that the simplest possible approach is shockingly effective: I wrote functions implementing each opcode, then called them in a loop.

/// Runs the VM starting at the given address until it terminates

#[inline]

pub fn run<D: Device>(&mut self, dev: &mut D, mut pc: u16) {

loop {

let op = self.ram[usize::from(pc)];

pc = pc.wrapping_add(1);

let Some(next) = self.op(op, dev, pc) else {

break;

};

pc = next;

}

}

/// Executes a single operation

#[inline]

fn op<D: Device>(&mut self, op: u8, dev: &mut D, pc: u16) -> Option<u16> {

match op {

0x00 => op::brk(self, dev, pc),

0x01 => op::inc::<0b000>(self, dev, pc),

0x02 => op::pop::<0b000>(self, dev, pc),

0x03 => op::nip::<0b000>(self, dev, pc),

0x04 => op::swp::<0b000>(self, dev, pc),

0x05 => op::rot::<0b000>(self, dev, pc),

0x06 => op::dup::<0b000>(self, dev, pc),

0x07 => op::ovr::<0b000>(self, dev, pc),

0x08 => op::equ::<0b000>(self, dev, pc),

0x09 => op::neq::<0b000>(self, dev, pc),

0x0a => op::gth::<0b000>(self, dev, pc),

0x0b => op::lth::<0b000>(self, dev, pc),

0x0c => op::jmp::<0b000>(self, dev, pc),

0x0d => op::jcn::<0b000>(self, dev, pc),

0x0e => op::jsr::<0b000>(self, dev, pc),

// ... etc

}

}

The generic arguments (0b000) represent instruction flags: most Uxn

instructions come in 8 flavors, modified by RET, KEEP, and SHORT flags.

For example, here's the implementation for inc, the increment operator:

pub fn inc<const FLAGS: u8>(

vm: &mut Uxn,

_: &mut dyn Device,

pc: u16,

) -> Option<u16> {

let mut s = vm.stack_view::<FLAGS>();

let v = s.pop();

s.push(v.wrapping_add(1));

Some(pc)

}

The call to vm.stack_view::<FLAGS>() function hides a bunch of complexity:

- Selecting either the data or return stack depending on the

RETflag - Popping or peeking depending on the

KEEPflag - Configuring to either return

u8oru16depending on theSHORTflag

This means that actual function implementation isn't burdered with special cases; the code is straight-forward, but does the right thing automatically.

Because the opcode functions are monomorphized, everything ends up inlined

into Uxn::run, which weighs in at a hefty 11.4 KiB. This sounds like a lot,

but it's only 11 instructions per opcode!

I spent a bunch of time staring at the assembly for inefficiency, filing one bug against the Rust compiler, and wrote a few small scripts to extract the implementation of individual opcodes. Here's a few annotated examples:

; INC

0x100002d4c: ldrb w8, [x25] ; load stack offset

0x100002d50: ldrb w9, [x24, x8] ; load value from stack

0x100002d54: add w9, w9, #1 ; increment

0x100002d58: strb w9, [x24, x8] ; write back to stack

0x100002d5c: b 0x100002d1c ; jump back to loop

; ADD

0x100003004: ldrb w8, [x25] ; load stack offset

0x100003008: ldrb w9, [x24, x8] ; load value from stack

0x10000300c: sub w8, w8, #1 ; decrement stack offset

0x100003010: and x10, x8, #0xff ; wrap stack offset

0x100003014: ldrb w11, [x24, x10] ; pop second value

0x100003018: add w9, w11, w9 ; perform addition

0x10000301c: b 0x1000030d0 ; unconditional branch to cleanup

0x1000030d0: strb w8, [x25] ; write modified stack offset

0x1000030d4: strb w9, [x24, x10] ; store new value in stack

0x1000030d8: b 0x100002d1c ; jump back to loop

; JMP

0x100002eac: ldrb w8, [x25] ; load stack offset

0x100002eb0: ldrsb w9, [x24, x8] ; load a *signed* byte from the stack

0x100002eb4: sub w8, w8, #1 ; decrement stack offset

0x100002eb8: strb w8, [x25] ; write modified stack offset

0x100002ebc: add w27, w27, w9 ; update program counter

0x100002ec0: b 0x100002d1c ; jump back to loop

(The full function list is here)

Most of the important values (e.g. the stack pointers and program counter) are

kept in registers, since all of the function calls have been inlined into the

body of Uxn::run.

This makes the evaluation loop blazing fast, although we can do even better with hand-written assembly (which is the subject of a different post!).

Panic safety

While reading the assembly for Uxn::run, I also audited it for panic paths.

The definition of "safe" Rust allows for panics; the most common way to add a

panic path is probably indexing (data[i]), which will panic if the index is

out of bounds.

The Uxn CPU is designed with stacks that are 256-bytes each (with an explicitly

wrapping index) and a 65536-byte RAM. This means that accessing the stack with

a u8 index, or the RAM with a u16 index, can never exceed the bounds of that

array.

By explicitly specifying array size (instead of using slices), carefully

managing index types, and using wrapping_add/sub operations, the compiler

can be persuaded to elide these checks. For example, this function has no panic

paths:

pub struct Stack {

data: [u8; 256],

index: u8,

}

impl Stack {

fn push_byte(&mut self, v: u8) {

self.index = self.index.wrapping_add(1);

self.data[usize::from(self.index)] = v;

}

}

Consistent use of these techniques means that the entire Uxn::run function

has no panic paths, last time I checked. It's hard to maintain this property

automatically – I have to disassembly and check – but I also don't expect to

change the implementation particularly often.

Attaching devices

The Uxn CPU supports two special instructions (DEI / DEO) which send and

receive bytes from "devices". These devices provide the rest of the computer,

e.g. the screen and keyboard support.

In raven-uxn, this is implemented through a trait:

/// Trait for a Uxn-compatible device

pub trait Device {

/// Performs the `DEI` operation for the given target

///

/// This function must write its output byte to `vm.dev[target]`; the CPU

/// evaluation loop will then copy this value to the stack.

fn dei(&mut self, vm: &mut Uxn, target: u8);

/// Performs the `DEO` operation on the given target

///

/// The input byte will be written to `vm.dev[target]` before this function

/// is called, and can be read by the function.

///

/// Returns `true` if the CPU should keep running, `false` if it should

/// exit.

#[must_use]

fn deo(&mut self, vm: &mut Uxn, target: u8) -> bool;

}

The opcode implementation for DEI and DEO take a trait object and call into

these functions:

impl Uxn {

/// Device Output

///

/// ```text

/// DEO val device8 --

/// ```

///

/// Writes a value to the device page. The target device might capture the

/// writing to trigger an I/O event.

#[inline]

pub fn deo<const FLAGS: u8>(

&mut self,

dev: &mut dyn Device,

pc: u16,

) -> Option<u16> {

let mut s = self.stack_view::<FLAGS>();

let i = s.pop_byte();

let mut run = true;

match s.pop() {

Value::Short(v) => {

let [lo, hi] = v.to_le_bytes();

let j = i.wrapping_add(1);

self.dev[usize::from(i)] = hi;

run &= dev.deo(self, i);

self.dev[usize::from(j)] = lo;

run &= dev.deo(self, j);

}

Value::Byte(v) => {

self.dev[usize::from(i)] = v;

run &= dev.deo(self, i);

}

}

if run {

Some(pc)

} else {

None

}

}

}

Looking at the disassembly, the compiler is smart enough to devirtualize this

call (at least in the raven-cli executable):

; DEI

0x100002fb8: ldrb w8, [x20, #520]

0x100002fbc: ldrb w21, [x24, x8]

0x100002fc0: ldr x0, [sp, #8]

0x100002fc4: mov x1, x20

0x100002fc8: mov x2, x21

0x100002fcc: bl 0x1001016f4 <<raven_varvara::Varvara as raven_uxn::Device>::dei::ha3d6786c5cc005d4>

0x100002fd0: add x8, x20, x21

0x100002fd4: ldrb w8, [x8, #8]

0x100002fd8: ldrb w9, [x20, #520]

0x100002fdc: strb w8, [x24, x9]

0x100002fe0: b 0x100002d1c

Implementing the Varvara system

Evaluating opcodes is fine and good, but the Uxn CPU has to talk to the outside

world. The Varvara system plugs into the DEI and DEO opcodes to implement

the rest of the computer, specifying seven devices: system, console,

screen, audio, controller, mouse, file, datetime.

The Uxn CPU is small and well-specified; the rest of Varvara system is nominally specified, but ground truth is given by the reference implementation.

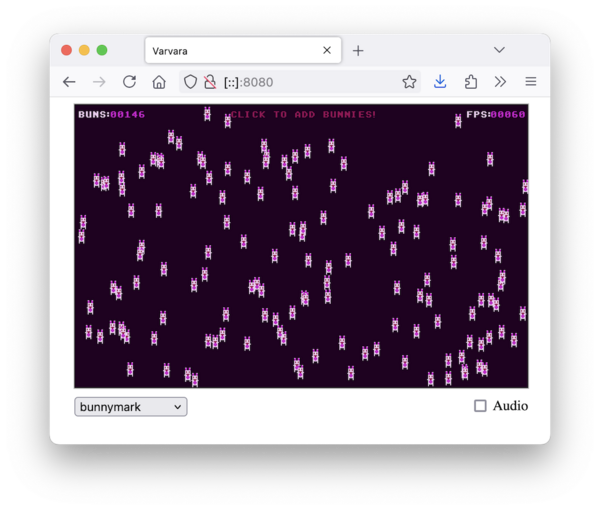

Fortunately, there are a bunch of individual ROMs to test each part of the system. This one tests blending modes:

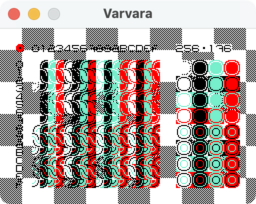

Here's one which checks auto-advance modes when drawing sprites:

![]()

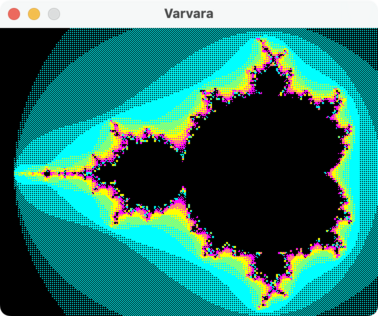

The mandelbrot example serves as a useful performance benchmark:

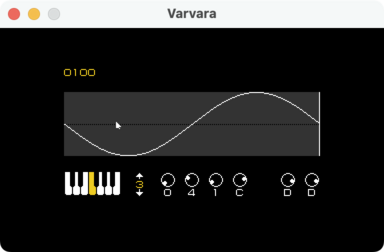

The audio device implementation is very different from the specification.

This piano was my nemesis for several days:

There's a extremely long tail of compatability! I had the basics up and running in a few days, but ironing out all of the kinks took a several weeks of nights-and-weekend project time. Even now, it's possible that it's not bug-for-bug compatible with the reference implementation.

Library design

One of the reasons it took so long was a architectural u-turn relatively late in

the game: I decided to make the raven-varvara crate framework-independent.

In today's code, raven-varvara only has a few more dependencies than

raven-uxn; notice the lack of UI framework in this list:

[dependencies]

chrono.workspace = true

log.workspace = true

static_assertions.workspace = true

zerocopy.workspace = true

uxn = { path = "../raven-uxn", package = "raven-uxn" }

Decoupling from the UI framework makes the library extremely flexible! Any UI

framework can embed a Varvara object and call its event functions, then draw

the output image that it provides.

In practice, you have to pick two main UI components:

- A windowing / event framework, which draws the screen and translates events

for

raven-varvara. - An audio library, which runs four audio channels based on stream data provided

by the library (shared with worker threads by an

Arc<Mutex<StreamData>>).

The repository includes a reference implementation, raven-gui, which uses

egui as the windowing / event framework

and cpal as the audio backend.

Flagship applications

At this point, I've tested raven-gui on basically all of the

flagship applications.

Running on the web

The dependencies for raven-gui were carefully selected for WebAssembly

support, so the GUI can be compiled and run on the web!

The only tricky part is that eframe

(the egui framework crate) expects to

own the event loop – and the entire window! I had to stray from the examples

and write my own

index.html,

along with a few hundred lines of

glue code

to integrate native HTML UI elements with the eframe application.

Acknowledgments

Example ROMs and the design of Uxn + Varvara are courtsey of 100 Rabbits / Devine Lu Livega, and were released under the MIT license.

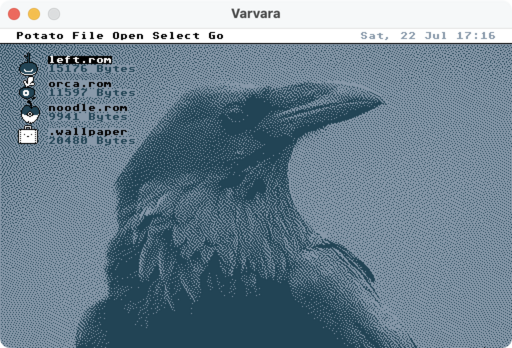

The raven photo is courtsey of the National Park Service / Kent Miller, and was dithered using this tool.